LAMMPS & Kokkos: Using Kokkos in LAMMPS to enable performance portable molecular dynamics simulations across scales of accuracy, length, and time

The science

LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator) is a classical molecular dynamics simulation code that is important for materials modeling. LAMMPS is a critical code within the DOE Office of Science (SC), funded over many years in part by the DOE SC Advanced Scientific Computing Research (ASCR) and Biological and Environmental Research (BER) programs. Widely used across many physics domains, LAMMPS can be applied to solid-state materials such as metals and semiconductors, as well as to soft matter such as biomolecules and polymers. It can be used to model systems at the atomic, meso, or continuum scale.

Additionally, LAMMPS is designed to run on systems ranging from single processors to the largest accelerator-based supercomputers. To achieve extreme scales, LAMMPS relies on the Kokkos performance portability library.

The enabling software: Kokkos

Kokkos is a performance-portability ecosystem, written primarily in C++, that provides abstraction layers to enable efficient execution across diverse computer architectures, including CPUs, GPUs, and emerging data flow devices from various vendors. Kokkos also provides data abstractions to change the memory layout of data structures, such as 2D and 3D arrays, to optimize performance on different hardware.
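The memory-layout idea can be illustrated with a minimal, standalone C++ sketch. It is not Kokkos code: the names `RowMajor`, `ColumnMajor`, `Array2D`, and `fill_and_sum` are hypothetical and exist only for this illustration. In Kokkos itself, the analogous role is played by `Kokkos::View` together with layout types such as `Kokkos::LayoutRight` and `Kokkos::LayoutLeft`. The sketch assumes a dense 2D array of `double` and shows one kernel, written once, running unchanged on two different layouts.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical layout tags for illustration only (not the Kokkos API).
struct RowMajor {
    // Rightmost index is contiguous: consecutive j are adjacent in memory.
    static std::size_t offset(std::size_t i, std::size_t j,
                              std::size_t /*rows*/, std::size_t cols) {
        return i * cols + j;
    }
};

struct ColumnMajor {
    // Leftmost index is contiguous: consecutive i are adjacent in memory.
    static std::size_t offset(std::size_t i, std::size_t j,
                              std::size_t rows, std::size_t /*cols*/) {
        return j * rows + i;
    }
};

// Minimal 2D array whose memory layout is a compile-time parameter.
template <typename Layout>
class Array2D {
public:
    Array2D(std::size_t rows, std::size_t cols)
        : rows_(rows), cols_(cols), data_(rows * cols, 0.0) {}

    // Logical (i, j) access; the layout decides where the value lives.
    double& operator()(std::size_t i, std::size_t j) {
        assert(i < rows_ && j < cols_);
        return data_[Layout::offset(i, j, rows_, cols_)];
    }

    // Position of element (i, j) in the underlying flat storage.
    std::size_t flat_index(std::size_t i, std::size_t j) const {
        return Layout::offset(i, j, rows_, cols_);
    }

    std::size_t rows() const { return rows_; }
    std::size_t cols() const { return cols_; }

private:
    std::size_t rows_;
    std::size_t cols_;
    std::vector<double> data_;
};

// Layout-agnostic kernel: sets a(i, j) = 10*i + j and returns the sum.
template <typename Layout>
double fill_and_sum(Array2D<Layout>& a) {
    double sum = 0.0;
    for (std::size_t i = 0; i < a.rows(); ++i) {
        for (std::size_t j = 0; j < a.cols(); ++j) {
            a(i, j) = 10.0 * static_cast<double>(i) + static_cast<double>(j);
            sum += a(i, j);
        }
    }
    return sum;
}
```

Calling `fill_and_sum` on an `Array2D<RowMajor>` and on an `Array2D<ColumnMajor>` of the same shape gives the same result, while `flat_index` shows that the two arrays store their elements in a different order. Separating logical indexing from physical storage in this way is what lets the same source code use whichever layout suits the memory-access pattern of a given CPU or GPU.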

LAMMPS documentation includes extensive details on using Kokkos as the accelerator package to improve LAMMPS performance.

Long-term funding from both the DOE Office of Science and the DOE National Nuclear Security Administration (NNSA) has contributed to the success of Kokkos and, by extension, its clients, such as LAMMPS.

Kokkos supports LAMMPS by targeting the full range of backend programming models that LAMMPS uses: CUDA, HIP, SYCL, HPX, OpenMP, and C++ threads. This allows applications such as LAMMPS to run on all major HPC platforms.

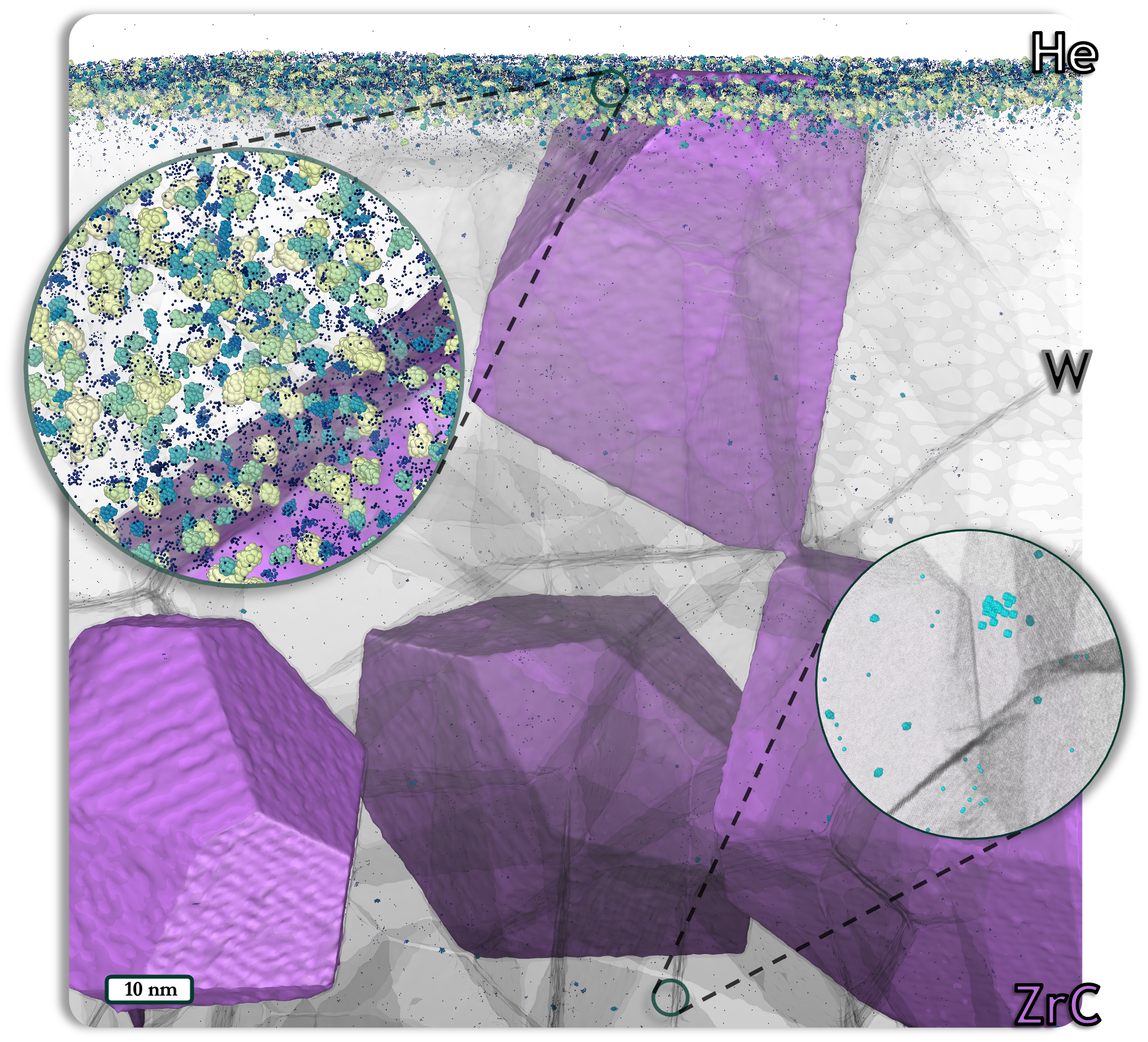

The billion-atom simulation (Nguyen-Cong, et al. 2021) shown in the second figure uses a machine-learned model to describe interatomic bonding. Versions of this simulation have been run, for example, on a small local cluster of NVIDIA H100 GPUs, on Oak Ridge National Laboratory’s (ORNL) Summit V100 GPUs, and on ORNL’s Frontier exascale supercomputer using AMD MI250X GPUs.

The LAMMPS/Kokkos integration exemplifies the benefits of collaboration across the Department of Energy. During the Exascale Computing Project (ECP), LAMMPS was funded through the Office of Science (SC) EXAALT project, while Kokkos was funded through both SC and the NNSA. Open science codes and NNSA mission-related codes across the DOE scientific landscape leverage Kokkos’ core abstractions for parallel execution and data management. Increasing Kokkos adoption by targeting both SC and NNSA applications promotes Kokkos’ sustainability by broadening its user and developer base. A broader user base, for example, provides incentives to develop more extensive documentation, tutorials, and a larger web presence, as demonstrated by the Kokkos homepage.

The long-term, sustained funding of Kokkos to support both Office of Science codes and NNSA mission work signals to the external community that Kokkos will be supported for years to come. This makes it easier for teams to adopt Kokkos and gives SC confidence that the Kokkos-related projects it sponsors will be part of a robust ecosystem. Multiple funding sources for Kokkos improve sustainability and resilience to funding changes. This robust funding and the expectation to support external users provide resources and motivation that enable a user-focused mindset and encourage projects to pursue outward-facing indicators of sustainability, such as Kokkos’ recent OpenSSF Best Practices Passing badge and the Kokkos team’s efforts to establish and join the High Performance Software Foundation.

Additional resources

LAMMPS citations:

LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales, A. P. Thompson, H. M. Aktulga, R. Berger, D. S. Bolintineanu, W. M. Brown, P. S. Crozier, P. J. in ’t Veld, A. Kohlmeyer, S. G. Moore, T. D. Nguyen, R. Shan, M. J. Stevens, J. Tranchida, C. Trott, S. J. Plimpton, Comp Phys Comm, 271 (2022) 108171. https://doi.org/10.1016/j.cpc.2021.108171

K. Nguyen-Cong, J. T. Willman, S. G. Moore, A. B. Belonoshko, R. Gayatri, E. Weinberg, M. A. Wood, A. P. Thompson, and I. I. Oleynik. 2021. Billion atom molecular dynamics simulations of carbon at extreme conditions and experimental time and length scales. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC ’21). Association for Computing Machinery, New York, NY, USA, Article 4, 1–12. https://doi.org/10.1145/3458817.3487400

Software mentioned: Kokkos

CASS members supporting the software: S4PST

Authors: Terece L. Turton, Michael Heroux, David E. Bernholdt, and Lois Curfman McInnes

Acknowledgement: the LAMMPS team

Published: January 7, 2026