Whole Device Modeling of Magnetically Confined Fusion Plasma

The science

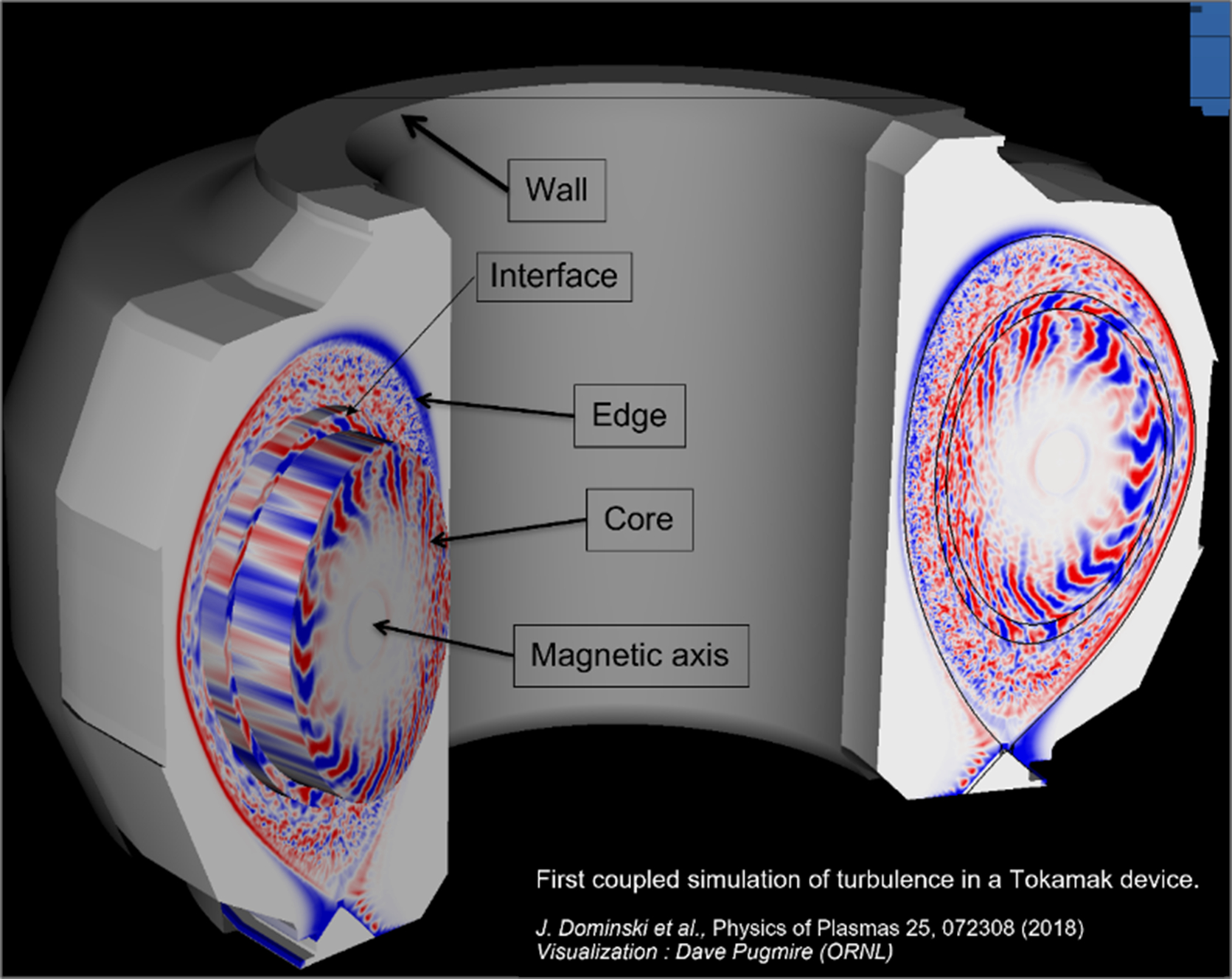

Magnetic confinement fusion devices are being designed within the International Thermonuclear Experimental Reactor (ITER) project and other projects, based on physics regimes achieved in present-day experiments. First-principles-based modeling and simulation are required to design and optimize these new facilities, which may operate in physics regimes that have not yet been explored. The fusion community is developing an approach to first-principles-based whole device modeling that will provide predictive numerical simulations of the physics of magnetically confined fusion plasmas, enabling design optimization and filling in the experimental gaps for ITER and future fusion devices.

The enabling software

Coupled magnetic fusion simulation codes rely on a wide range of other software to deliver scientific capabilities. The following highlights software from the CASS scientific software ecosystem used by the WDMApp project, which was part of the DOE Exascale Computing Project.

Mathematical libraries (FASTMath)

WDMApp build options bring in the xSDK in order to use many of the FASTMath libraries. Math libraries in the CASS ecosystem that are used by WDMApp codes, either by default or as variant options, include PETSc/TAO, hypre, SuperLU, AMReX, MFEM, SUNDIALS, Kokkos Kernels, STRUMPACK, and libEnsemble.

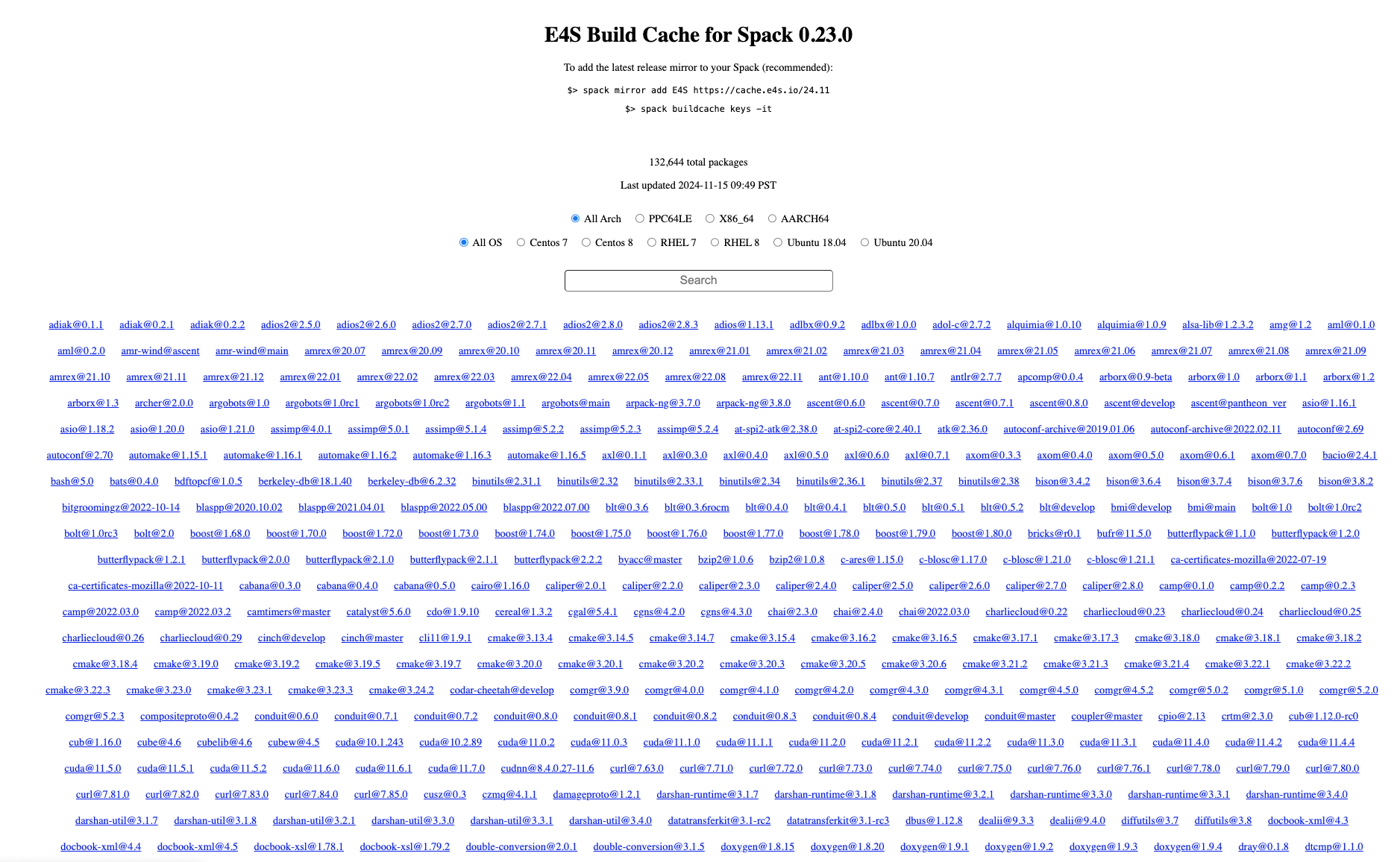

Software ecosystem and delivery (PESO)

Leveraging Spack and E4S binaries is one of the options for WDMApp builds. Building a code requires compiling the source code into machine-executable instructions. For a simulation as complex as WDMApp, this means bringing in many software libraries, a process that can take a significant amount of time. Using a prebuilt binary cache means that many of the necessary libraries have already been compiled. The E4S approach thus allows developers and users to take advantage of build caches, pre-built containers, and installations at a variety of HPC facilities to speed up the build process. WDMApp has achieved significant speedups in build time via Spack and E4S.
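The idea behind a binary cache can be illustrated with a small sketch. The code below is a toy model only: the `BinaryCache` class, the `spec_hash` function, and the package names are hypothetical and do not reproduce how Spack or E4S actually hash, store, or fetch binaries. It simply shows why a build that finds its dependencies already compiled under a matching configuration key can skip the expensive compile step.

```python
import hashlib
import json


def spec_hash(name, version, options):
    """Return a key that is identical for identical build configurations.

    Hypothetical scheme for illustration; real package managers use a
    richer description of the build (compiler, target, dependencies).
    """
    canonical = json.dumps(
        {"name": name, "version": version, "options": sorted(options)},
        sort_keys=True,
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class BinaryCache:
    """Toy in-memory cache mapping configuration keys to built artifacts."""

    def __init__(self):
        self._store = {}
        self.compiles = 0  # counts how often the slow path was taken

    def install(self, name, version, options, compile_fn):
        key = spec_hash(name, version, options)
        if key in self._store:
            # Cache hit: reuse the prebuilt artifact, no compilation needed.
            return self._store[key]
        # Cache miss: compile once and remember the result.
        self.compiles += 1
        artifact = compile_fn(name, version, options)
        self._store[key] = artifact
        return artifact


def fake_compile(name, version, options):
    """Stand-in for a long-running compile; returns a label for the binary."""
    return f"{name}-{version}-" + "+".join(sorted(options))


if __name__ == "__main__":
    cache = BinaryCache()
    cache.install("libA", "1.0", ["gpu", "mpi"], fake_compile)
    cache.install("libA", "1.0", ["mpi", "gpu"], fake_compile)  # same config
    print("compiles performed:", cache.compiles)
```

In this sketch the second request reuses the first build because the option order does not change the key; only a genuinely different configuration triggers another compile.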

Data and visualization (RAPIDS)

WDMApp leverages ADIOS for code coupling and fast I/O. HDF5 and PnetCDF are available as optional I/O technologies.

zfp compression is available through a Spack build option or can be used through a plugin to ADIOS.

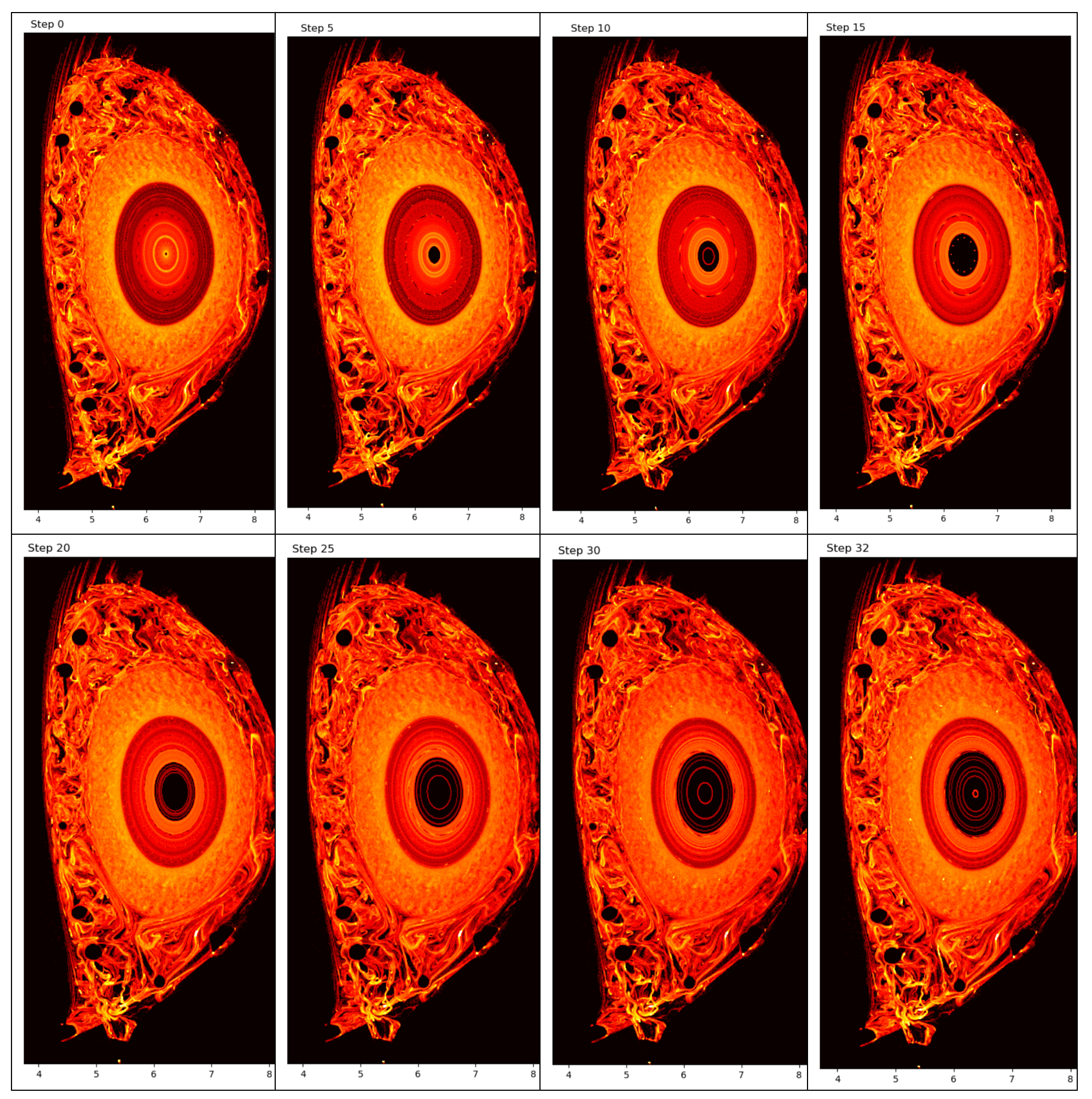

WDMApp leveraged Viskores (formerly known as VTK-m) to develop Poincaré maps in its XGC code to plot the magnetic field perturbations in the tokamak, allowing scientists to see how the magnetic field topology changes over time due to plasma turbulence. Use of Viskores significantly sped up the Poincaré calculations.
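For readers unfamiliar with Poincaré maps, the following is a minimal sketch of the general idea, not the XGC or Viskores implementation. A Poincaré map records where a field line punctures a fixed cross-section each time it completes a circuit around the torus. As a stand-in for real field-line tracing, the sketch uses the Chirikov standard map, a textbook toy model in which a single parameter `k` (an assumption here, playing the role of perturbation strength) controls whether the punctures stay on smooth curves or scatter. All function names are hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi


def standard_map_step(theta, p, k):
    """Advance a toy field line by one transit (Chirikov standard map).

    theta: angle-like coordinate in the cross-section
    p:     radius-like coordinate in the cross-section
    k:     perturbation strength (k = 0 means an unperturbed field)
    """
    p_next = (p + k * math.sin(theta)) % TWO_PI
    theta_next = (theta + p_next) % TWO_PI
    return theta_next, p_next


def poincare_section(theta0, p0, k, n_transits):
    """Collect the puncture points of one field line over n_transits."""
    points = []
    theta, p = theta0, p0
    for _ in range(n_transits):
        theta, p = standard_map_step(theta, p, k)
        points.append((theta, p))
    return points


def radial_spread(points):
    """Range of the radius-like coordinate visited by the field line."""
    ps = [p for _, p in points]
    return max(ps) - min(ps)


if __name__ == "__main__":
    for k in (0.0, 2.0):
        pts = poincare_section(theta0=1.0, p0=1.0, k=k, n_transits=200)
        print(f"k={k}: radial spread = {radial_spread(pts):.3f}")
```

With `k = 0` the radius-like coordinate never changes, which corresponds to a field line staying on a single nested surface; a nonzero `k` lets it wander. Production tools trace many field lines through simulation data, which is why the calculation benefits from GPU acceleration.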

WDMApp also uses DIY to express some of its algorithms.

Programming models and runtimes (S4PST)

WDMApp targets heterogeneous HPC architectures using Kokkos as its performance portability model. Additionally, Kokkos is an optional backend for Viskores for GPU performance and can be leveraged by WDMApp simulations for efficient visualization tasks on the GPU.

WDMApp allows multiple options for MPI via build flags, including Open MPI and MPICH. OpenACC and OpenMP are available via WDMApp’s configuration options. The OpenMP Validation and Verification Test Suite and the OpenACC Verification and Validation Test Suite can help users assess whether the compilers available to them provide adequate support for these programming models.

Development tools (STEP)

TAU, HPCToolkit, PAPI, and Darshan are available through the WDMApp Spack configuration file. These tools belong to the suite of development tools supported by the STEP CASS member organization and are designed to help scientific simulation codes with parallel high-performance application debugging and performance profiling.

Additional resources

WDMApp citation: J. Dominski, J. Cheng, G. Merlo, V. Carey, R. Hager, L. Ricketson, J. Choi, S. Ethier, K. Germaschewski, S. Ku, A. Mollen, N. Podhorszki, D. Pugmire, E. Suchyta, P. Trivedi, R. Wang, C. S. Chang, J. Hittinger, F. Jenko, S. Klasky, S. E. Parker, and A. Bhattacharjee, “Spatial coupling of gyrokinetic simulations, a generalized scheme based on first-principles,” Phys. Plasmas 28, 022301 (2021); DOI:10.1063/5.0027160

Software mentioned: PETSc/TAO, hypre, SuperLU, AMReX, MFEM, SUNDIALS, Kokkos Kernels, STRUMPACK, libEnsemble, Spack, E4S, ADIOS, HDF5, PnetCDF, zfp, Viskores, DIY, Kokkos, Open MPI, MPICH, OpenMP Validation and Verification Test Suite, OpenACC Verification and Validation Test Suite, TAU, HPCToolkit, PAPI, Darshan

CASS members supporting the software: FASTMath, PESO, RAPIDS, S4PST, STEP

Authors: Terry Turton, David E. Bernholdt, and Lois Curfman McInnes

Acknowledgement: Amitava Bhattacharjee, C.S. Chang and the WDMApp team

Published: January 24, 2025