WarpX Enables Computational Design of Next-Generation Plasma-Based Accelerators

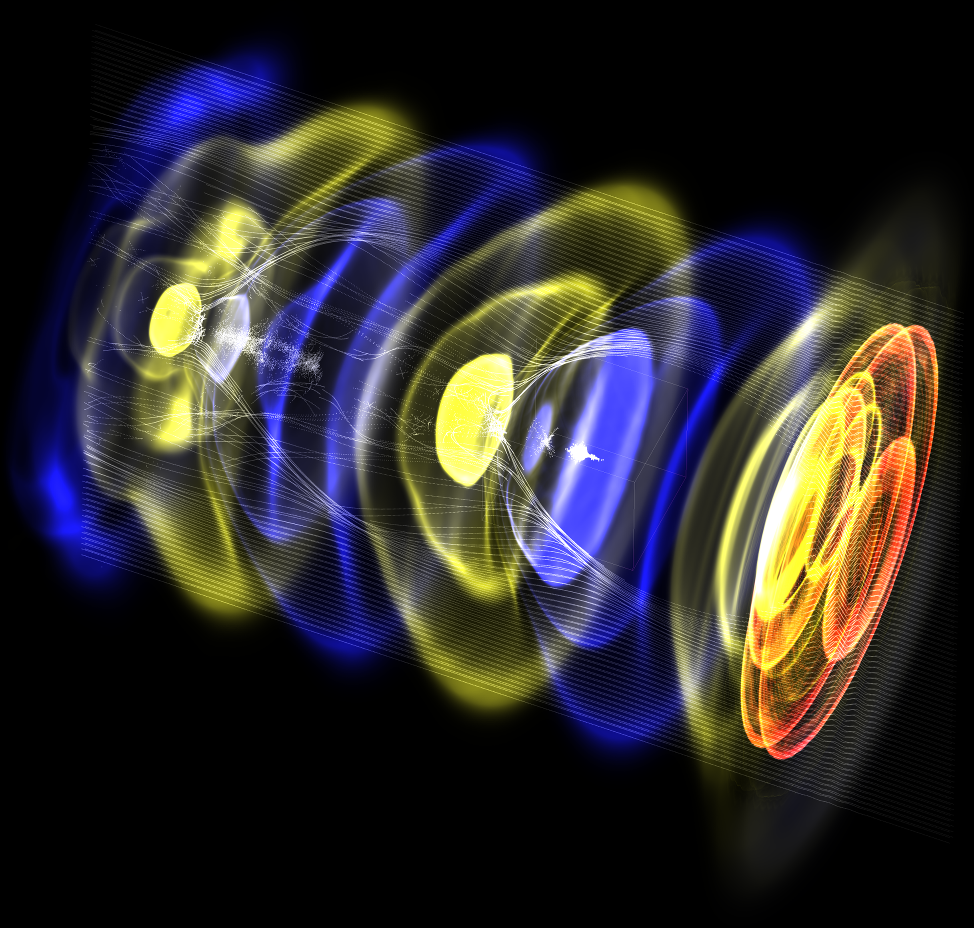

The science

WarpX is a particle-in-cell (PIC) code that simulates particles interacting with one another, with electromagnetic fields, and with surfaces. Originally developed within the Exascale Computing Project to model chains of plasma-based particle accelerators for future high-energy physics colliders, it has since broadened to other applications, including other types of particle accelerators and beam physics, laser-plasma interactions, astrophysical plasmas, nuclear fusion energy and plasma confinement, plasma thrusters and electric propulsion, and microelectronics.
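To make the particle-field coupling concrete, the sketch below shows one ingredient of a typical PIC cycle: advancing a charged particle through given electric and magnetic fields. It uses the non-relativistic Boris scheme, a standard textbook choice. It is not taken from WarpX's source, and the function name `boris_push`, the normalized units, and the field values are illustrative assumptions.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def boris_push(x, v, E, B, q_over_m, dt):
    """Advance position x and velocity v by one step dt (hypothetical helper).

    Non-relativistic Boris scheme: half electric kick, magnetic rotation,
    half electric kick, then a position update with the new velocity.
    """
    h = 0.5 * q_over_m * dt
    v_minus = tuple(vi + h * Ei for vi, Ei in zip(v, E))          # first half kick
    t = tuple(h * Bi for Bi in B)                                  # rotation vector
    t2 = sum(ti * ti for ti in t)
    s = tuple(2.0 * ti / (1.0 + t2) for ti in t)
    v_prime = tuple(a + b for a, b in zip(v_minus, cross(v_minus, t)))
    v_plus = tuple(a + b for a, b in zip(v_minus, cross(v_prime, s)))
    v_new = tuple(vi + h * Ei for vi, Ei in zip(v_plus, E))       # second half kick
    x_new = tuple(xi + dt * vi for xi, vi in zip(x, v_new))
    return x_new, v_new

# Assumed test case: unit charge-to-mass ratio, uniform B along z, no E field.
x, v = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
for _ in range(1000):
    x, v = boris_push(x, v, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1.0, 0.1)
speed = math.sqrt(sum(c * c for c in v))
```

In a purely magnetic field the particle should only gyrate, so `speed` stays at its initial value up to round-off. A full PIC code would also deposit particle charge and current onto a grid, solve for the fields, and interpolate them back to each particle before every push.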

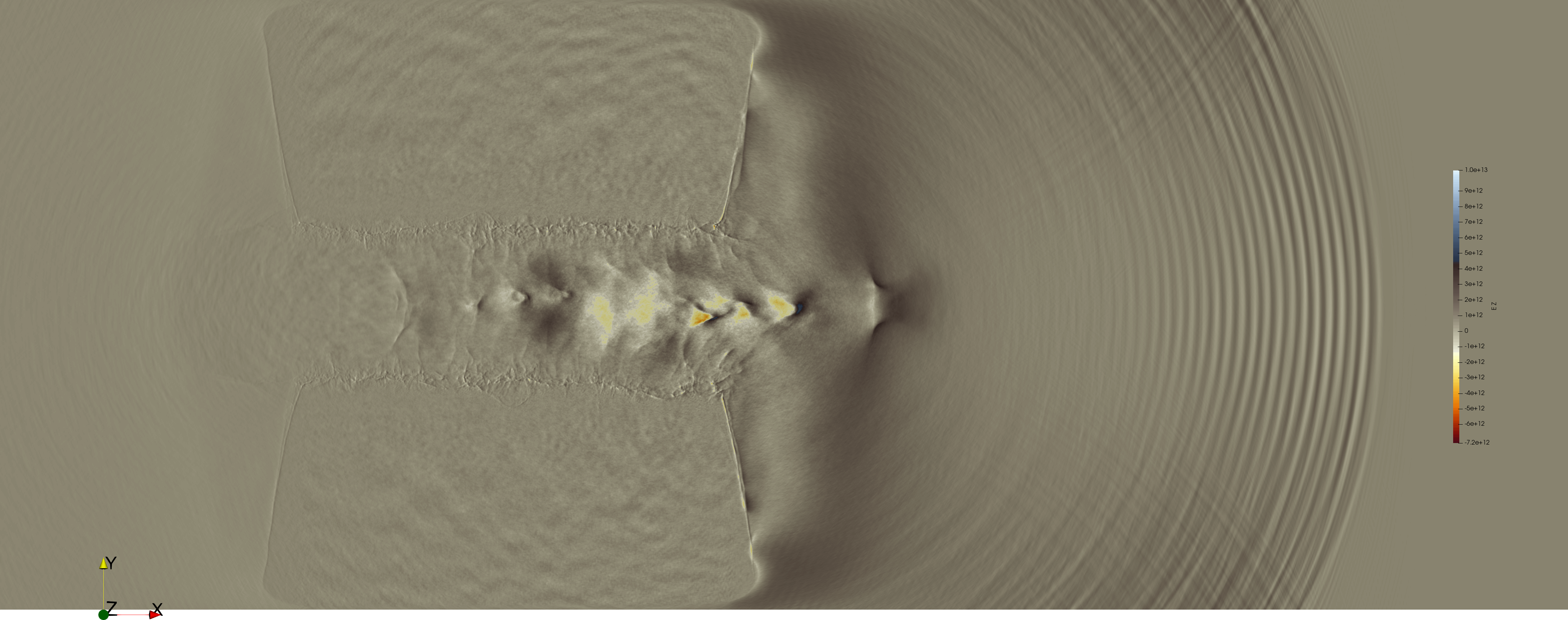

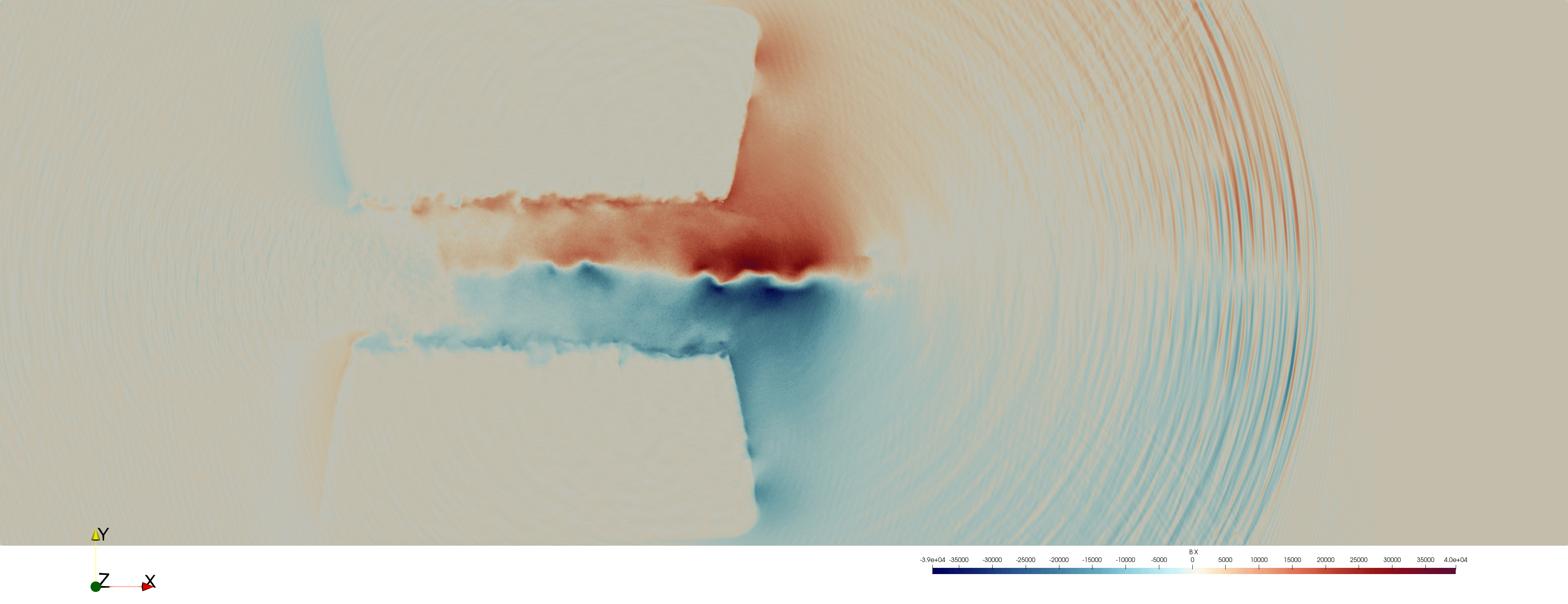

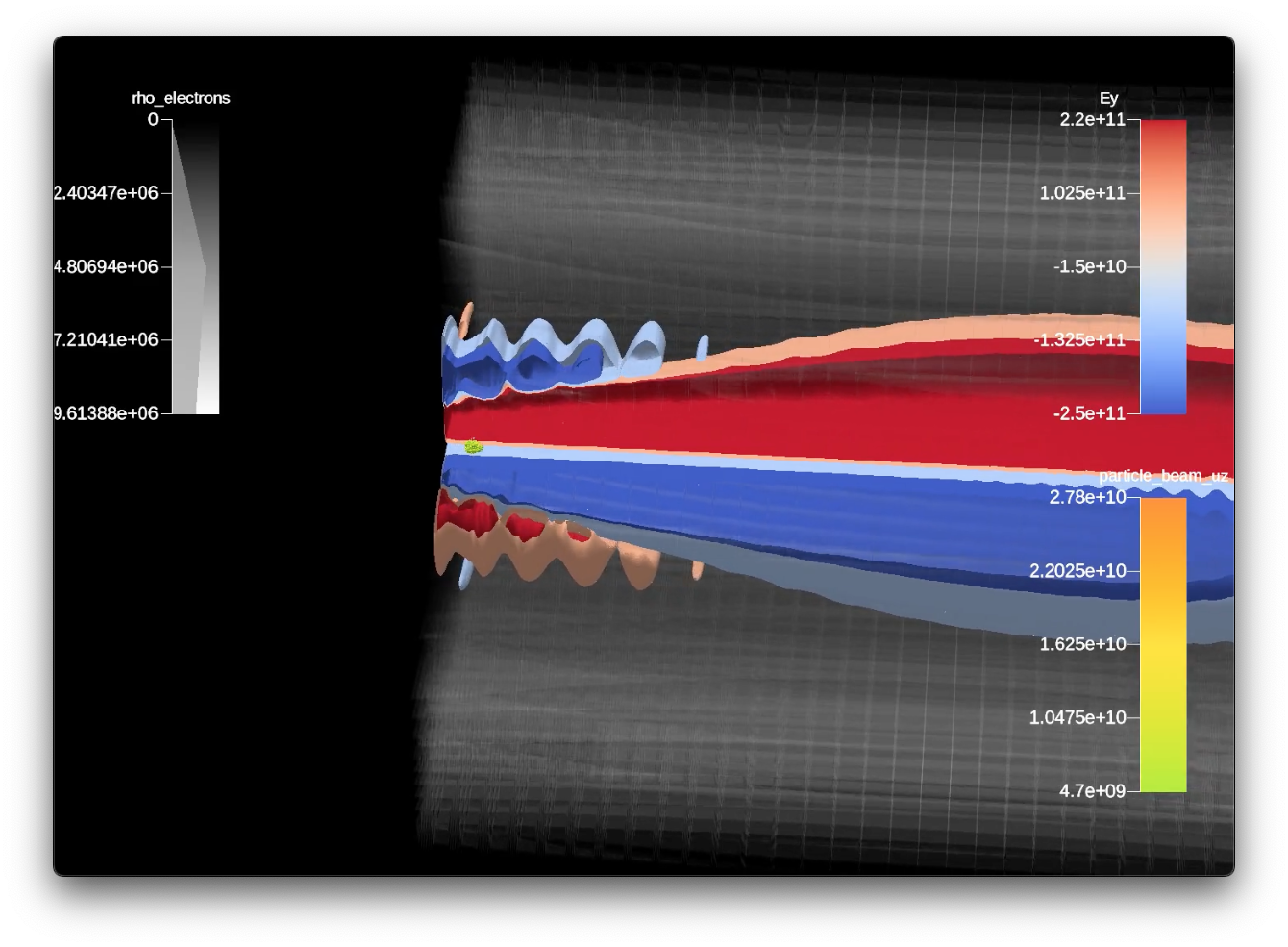

WarpX is a highly parallel, highly optimized code that runs on GPUs and multi-core CPUs and scales well from desktop systems to the world’s largest supercomputers, solving science problems at leading resolution and speed. What makes WarpX a unique PIC modeling framework is its combination of speed and scalability with techniques such as adaptive mesh refinement (AMR), novel numerical solvers, advanced and freely shaped (embedded) boundaries, and dynamic load balancing. Through a user-friendly Python interface, WarpX is highly customizable and facilitates seamless bindings to other codes and data science frameworks, achieving an effective synthesis of HPC and AI/ML.
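As a rough illustration of the idea behind load balancing, the sketch below redistributes grid blocks across processes according to a measured cost per block. It uses a simple greedy heuristic (assign the most expensive remaining block to the least loaded process). This is a generic textbook strategy chosen for brevity, not a description of the algorithm WarpX or AMReX uses. The function name `balance` and the cost values are hypothetical.

```python
import heapq

def balance(costs, n_ranks):
    """Greedily assign blocks to ranks so that per-rank load is roughly even.

    costs   : list of per-block costs (e.g. measured time per step)
    n_ranks : number of processes to distribute over
    Returns (assignment, loads): a dict block index -> rank, and per-rank totals.
    """
    heap = [(0.0, rank) for rank in range(n_ranks)]  # (current load, rank)
    heapq.heapify(heap)
    assignment = {}
    # Visit blocks from most to least expensive.
    for block in sorted(range(len(costs)), key=lambda i: costs[i], reverse=True):
        load, rank = heapq.heappop(heap)             # least loaded rank so far
        assignment[block] = rank
        heapq.heappush(heap, (load + costs[block], rank))
    loads = [0.0] * n_ranks
    for block, rank in assignment.items():
        loads[rank] += costs[block]
    return assignment, loads

# Hypothetical per-block costs, e.g. dominated by the local particle count.
costs = [8.0, 7.0, 6.0, 5.0, 4.0]
assignment, loads = balance(costs, n_ranks=2)
```

In a dynamic setting, a code would repeat such a redistribution periodically as particles move and the cost per block changes, weighing the gain against the cost of moving data between processes.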

WarpX was the 2022 winner of the Association for Computing Machinery (ACM) Gordon Bell Prize, a prestigious award recognizing outstanding achievement in high-performance computing. The prize requires scientific innovation, innovation in the software implementation, and high performance (WarpX Gordon Bell citation).

The enabling software

WarpX relies on a wide range of other software to deliver its scientific capabilities. The following highlights software from the CASS scientific software ecosystem used by WarpX.

Mathematical libraries (FASTMath)

WarpX utilizes a number of mathematical libraries and related tools, including AMReX for adaptive mesh refinement capabilities, and libEnsemble for parameter studies. These libraries are part of the Extreme-Scale Scientific Software Development Kit (xSDK), which provides enhanced interoperability among member libraries, making it easier for WarpX to incorporate additional numerical libraries when needed for future developments.

Software ecosystem and delivery (PESO)

The WarpX team relies on Spack to build the application and its many dependencies in a coordinated fashion (WarpX Spack package). Moreover, WarpX and its dependencies are part of the E4S software distribution (WarpX in the E4S documentation portal), allowing developers and users to take advantage of benefits such as build caches, pre-built containers, and installations at a variety of HPC facilities.

Data and visualization (RAPIDS)

WarpX supports many different output file formats, including I/O compatible with openPMD, a standardized metadata naming convention for particle-mesh data files. Through openPMD, WarpX leverages both the HDF5 and ADIOS I/O libraries to support these formats. ADIOS added OpenMP, CUDA and BP capabilities specifically to support WarpX. Through the openPMD backends HDF5 and ADIOS, WarpX users can make use of lossless and lossy compressors such as zfp, c-blosc and SZ.

WarpX also has a broad base of users who use a variety of different tools to visualize and analyze the results of their simulations. Many of these products have been enhanced to better support WarpX, e.g., through adding readers to support the various WarpX I/O formats.

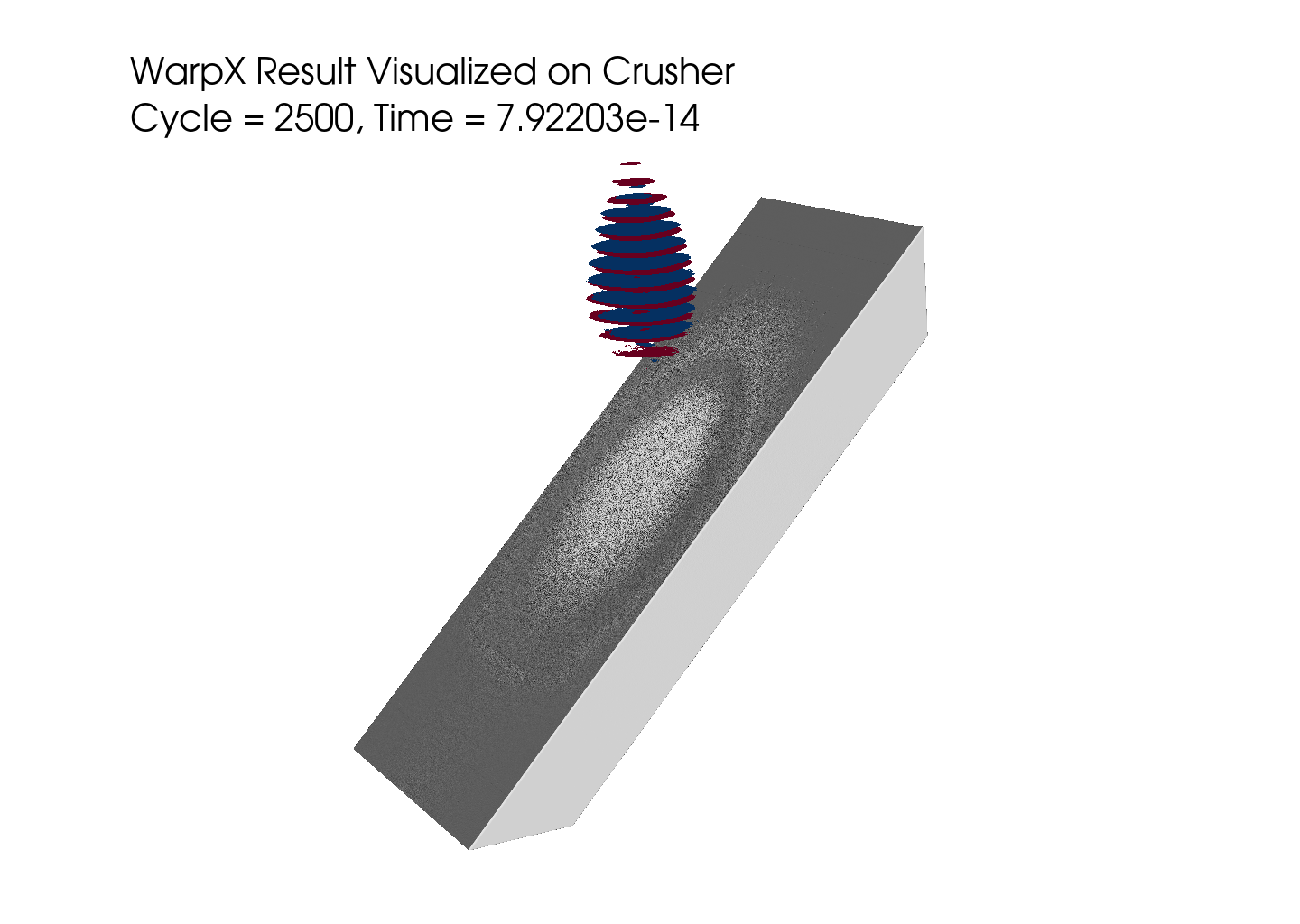

ParaView is a widely used open-source post-processing visualization application that enables WarpX users to visualize, analyze and explore simulation data. ParaView’s GPU capabilities benefit applications such as WarpX, and an openPMD reader was developed in ParaView specifically for WarpX. WarpX has also integrated the ParaView Catalyst API for in situ visualization, which allows visualization and analysis tasks to execute while the simulation is running instead of waiting until it ends.

Ascent is another lightweight in situ visualization framework that WarpX has integrated into its code. WarpX was an early user of the Ascent Replay capability, which allows a simulation code to easily and cheaply develop its visualization pipeline on low-resolution data before running at high resolution.

VisIt is another widely used post hoc visualization tool, similar to ParaView. VisIt also developed an openPMD reader for WarpX output files, along with GPU capabilities.

ParaView, Ascent and VisIt all leverage the Viskores (formerly known as VTK-m) visualization library to support their GPU capabilities.

Programming models and runtimes (S4PST)

Like most high-performance computational science and engineering applications, WarpX uses the Message Passing Interface (MPI) for inter-node parallelism. MPICH and Open MPI are implementations of the MPI standard that many supercomputer vendors use as the basis of their proprietary MPI implementations, and they can also be used directly.

Development tools (STEP)

Competing for a Gordon Bell Prize requires the best possible performance of the application. The WarpX team used multiple tools to analyze different aspects of application performance, including Darshan for I/O, TAU for tracing, and the Empirical Roofline Tool to understand how the achieved performance compares to the limits of the hardware. These tools, in turn, rely on tools like PAPI to access hardware performance counters.
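For readers unfamiliar with roofline analysis, the sketch below shows the basic model that such tools build on: a kernel's attainable performance is bounded by the smaller of the machine's peak compute rate and its memory bandwidth multiplied by the kernel's arithmetic intensity (floating-point operations per byte moved). The hardware numbers and the function name `roofline_gflops` are hypothetical and do not describe any machine used by the WarpX team.

```python
def roofline_gflops(intensity, peak_gflops, bandwidth_gbs):
    """Upper bound on performance (GFLOP/s) under the basic roofline model.

    intensity     : arithmetic intensity of the kernel, in FLOP per byte
    peak_gflops   : peak compute rate of the hardware, in GFLOP/s
    bandwidth_gbs : peak memory bandwidth of the hardware, in GB/s
    """
    return min(peak_gflops, bandwidth_gbs * intensity)

# Hypothetical accelerator: 10 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK_GFLOPS = 10000.0
BANDWIDTH_GBS = 1000.0

# Intensity at which the bound switches from memory-limited to compute-limited.
ridge_point = PEAK_GFLOPS / BANDWIDTH_GBS

memory_bound = roofline_gflops(0.5, PEAK_GFLOPS, BANDWIDTH_GBS)    # low-intensity kernel
compute_bound = roofline_gflops(40.0, PEAK_GFLOPS, BANDWIDTH_GBS)  # high-intensity kernel
```

Comparing a kernel's measured performance against this bound indicates whether further optimization should target data movement (below the ridge point) or arithmetic throughput (above it).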

Additional resources

WarpX citation: Fedeli L, Huebl A, Boillod-Cerneux F, Clark T, Gott K, Hillairet C, Jaure S, Leblanc A, Lehe R, Myers A, Piechurski C, Sato M, Zaim N, Zhang W, Vay J-L, Vincenti H. Pushing the Frontier in the Design of Laser-Based Electron Accelerators with Groundbreaking Mesh-Refined Particle-In-Cell Simulations on Exascale-Class Supercomputers. SC22: International Conference for High Performance Computing, Networking, Storage and Analysis (SC). ISSN:2167-4337, pp. 25-36, Dallas, TX, US, 2022. DOI:10.1109/SC41404.2022.00008

Software mentioned: AMReX, libEnsemble, Spack, E4S, HDF5, ADIOS, zfp, ParaView, ParaView Catalyst, Ascent, VisIt, Viskores, MPICH, Open MPI, Darshan, TAU, Empirical Roofline Tool, PAPI

CASS members supporting the software: FASTMath, PESO, RAPIDS, S4PST, STEP

Authors: Terry Turton, David E. Bernholdt, and Lois Curfman McInnes

Acknowledgement: Jean-Luc Vay, Axel Huebl, and the WarpX team

Published: January 21, 2025